Were you surprised by the election results? If so, you were not alone. Most professional pollsters had Clinton winning by anywhere from 3% to 11%. We can chastise them for getting it wrong, but first we need to consider the accuracy of our own decision making and what we can do to increase precision in the future.

When Trump won the election, I immediately bashed the forecasters. They were all so certain of a Clinton win and had been for weeks. On the night of the election, Nate Silver of FiveThirtyEight predicted a 71% probability of Clinton winning, and out of 21 possible scenarios, Election Analytics had only one where Trump could prevail. Then I read an article on Defense One and it began to make sense.

The output of polling results is only as good as the data put into the model. And unfortunately, this data is manipulated by people. According to Kalev Leetaru, a big data specialist who discovered the location of Osama Bin Laden through a statistical analysis of news articles, the problem with the election polling was tied to flawed judgment about which data was relevant.

“When it comes to the kinds of questions that intelligence personnel actually want forecasting engines to answer, such as ‘Will Brexit happen’ or ‘Will Trump win,’ those are the cases where the current approaches fail miserably. It’s not because the data isn’t there. It is. It’s because we use our flawed human judgment to decide how to feed that data into our models and therein project our biases into the model’s outcomes.” (Kalev Leetaru)

The most accurate election prediction came from the artificial intelligence system MogIA. Drawing on 20 million data points pulled from online platforms (Google, YouTube, Twitter, etc.), MogIA has successfully predicted the last four presidential elections. Why? According to its developer:

“While most algorithms suffer from programmers/developer’s biases, MogIA aims at learning from her environment, developing her own rules at the policy layer and develop expert systems without discarding any data.” (Sanjiv Rai, founder of Genic.ai)

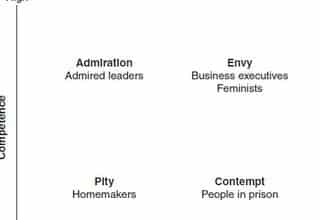

People are chock-full of biases that distort how information is absorbed and comprehended. One of the more common biases is motivated reasoning, where we interpret observations to fit a particular preconceived idea. Psychologists have shown that much of what we consider to be reasoning is actually rationalization. We have already made the decision about how to react, so our reasoning is really cherry-picking data to justify what we already wanted to do.

All leaders (and people) are susceptible to these types of biases. How many times have you hired someone only to find that they are not the same person you interviewed? Sure, they look the same, but the intelligent, driven professional you met is starkly different from the person you are now working with. Somehow your intuition led you down the wrong path. You can rationalize it as the result of outside forces, but you decided who they were within five minutes of interviewing and then looked for proof to support your gut.

Objective measures can remove some of the bias. Aptitude tests have been found to be highly predictive of performance, as have general intelligence tests and behavioral assessments. Interviews, however, are far less likely to foretell who will succeed. Research from the Society for Judgment and Decision Making found that people make better predictions about performance if they are given access to objective background information and prevented from conducting interviews entirely.

If we want to make the best decisions for our organizations, we cannot rely solely on intuition, nor can we dismiss our instincts. There must be a balance between logic and perception. This begins with collecting and analyzing data in the most objective manner possible. Once we understand the facts, we can consider the less tangible factors before coming to a final decision.

If this past election teaches anything, it’s that we cannot fall victim to our cognitive biases. Something feeling correct does not make it correct. Find ways to avoid being emotionally or intellectually invested in the findings; that’s the best way to keep an open mind. Include others who will challenge your biases and have no preconceived notions, like an independent contractor. Or maybe we should try to concern ourselves more with the actualities and lean away from trying to predict outcomes. I’ll try to remember that in the midterms.